I was reading a blog post about the testing strategy for the Wild linker, when I came upon a link to cargo-mutants, a mutation testing tool for Rust. The tool promised to be easy to set up, so I gave it a try. I'm happy to find that it totally delivers!

Briefly: mutation testing deliberately inserts bugs into the source code and looks for the cases where the test suite fails to catch them, that is, where all the tests still pass after the incorrect change has been made. Each such case indicates a gap in the test suite.
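To make that concrete, here is a tiny hypothetical example, not taken from librsvg. The mutant is written out by hand as a second function so both versions can be compared side by side. A tool like cargo-mutants makes such a change automatically (here, replacing >= with >) and reruns the test suite.

```rust
/// Hypothetical function under test.
pub fn is_adult(age: u32) -> bool {
    age >= 18
}

/// A mutant of is_adult, written out by hand: `>=` replaced with `>`.
pub fn is_adult_mutant(age: u32) -> bool {
    age > 18
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn weak_test_passes_for_both() {
        // These assertions hold for the original AND the mutant, so a suite
        // made only of them would report the mutant as "missed".
        let versions: [fn(u32) -> bool; 2] = [is_adult, is_adult_mutant];
        for f in versions {
            assert!(f(30));
            assert!(!f(5));
        }
    }

    #[test]
    fn boundary_test_tells_them_apart() {
        // The boundary value is where the two versions disagree, so a test
        // asserting is_adult(18) would fail against the mutant.
        assert!(is_adult(18));
        assert!(!is_adult_mutant(18));
    }
}
```

The weak test exercises every line of is_adult, so a coverage report would call it fully covered; only the boundary assertion actually distinguishes correct from incorrect code.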

Previously I had only seen mentions of "mutation testing" in passing, as something exotic to be done when testing compilers. I don't recall seeing it as a general tool; maybe I have not been looking closely enough.

Setup and running

Setting up cargo-mutants is easy enough: you can cargo install cargo-mutants and run it with cargo mutants.

For librsvg this ran for a few hours, but I discovered a couple of things related to the way the librsvg repository is structured. The repo is a cargo workspace with multiple crates: the librsvg implementation and public Rust API, the rsvg-convert binary, and some utilities like rsvg-bench.

- By default cargo-mutants only seemed to pick up the tests for rsvg-convert. I think it may have done this because it is the only binary in the workspace that has a test suite (e.g. rsvg-bench does not have a test suite).

- I had to run cargo mutants --package librsvg to tell it to consider the test suite for the librsvg crate, which is the main library. I think I could have used cargo mutants --workspace to make it run all the things; maybe I'll try that next time.

Initial results

My initial run on rsvg-convert produced useful results; cargo-mutants found 32 mutations in the rsvg-convert source code that ought to have caused failures, but the test suite didn't catch them.

The second run, on the librsvg crate, took about 10 hours. It is fascinating to watch it run. In the end it found 889 mutations that introduced bugs the test suite couldn't catch:

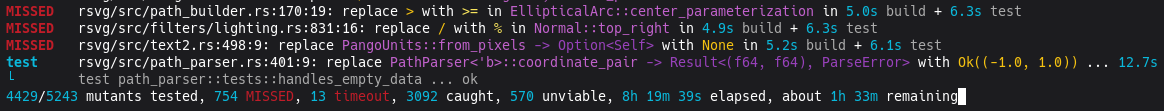

5243 mutants tested in 9h 53m 15s: 889 missed, 3663 caught, 674 unviable, 17 timeouts

What does that mean?

- 5243 mutants tested: how many modifications were tried on the code.

- 889 missed: the important ones. After a modification was made, the test suite failed to catch it.

- 3663 caught: Good! The test suite caught these!

- 674 unviable: These modifications didn't compile. Nothing to do.

- 17 timeouts: Worth investigating; maybe a function can be marked to be skipped for mutation.
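The four outcomes add up to the total: 889 + 3663 + 674 + 17 = 5243. As a small sketch, assuming the summary line keeps exactly the shape shown above, the hypothetical Summary type below parses it, checks that arithmetic, and computes the fraction of decided mutants (caught plus missed) that the tests caught. That ratio is only an illustration, not part of the cargo-mutants output shown above.

```rust
/// Hypothetical helper: the counts from a cargo-mutants summary line.
#[derive(Debug, Default, PartialEq)]
pub struct Summary {
    pub tested: u32,
    pub missed: u32,
    pub caught: u32,
    pub unviable: u32,
    pub timeouts: u32,
}

impl Summary {
    /// Parses a line shaped like
    /// "5243 mutants tested in 9h 53m 15s: 889 missed, 3663 caught, 674 unviable, 17 timeouts".
    /// Returns None if the line doesn't have that shape.
    pub fn parse(line: &str) -> Option<Summary> {
        // Everything after the first ": " is the comma-separated outcome list.
        let (head, tail) = line.split_once(": ")?;
        let tested = head.split_whitespace().next()?.parse::<u32>().ok()?;
        let mut summary = Summary { tested, ..Default::default() };
        for part in tail.split(", ") {
            let (count, label) = part.trim().split_once(' ')?;
            let count = count.parse::<u32>().ok()?;
            match label {
                "missed" => summary.missed = count,
                "caught" => summary.caught = count,
                "unviable" => summary.unviable = count,
                "timeout" | "timeouts" => summary.timeouts = count,
                _ => return None,
            }
        }
        Some(summary)
    }

    /// True if the four outcomes add up to the number of mutants tested.
    pub fn is_consistent(&self) -> bool {
        self.missed + self.caught + self.unviable + self.timeouts == self.tested
    }

    /// Fraction of mutants that compiled and finished (caught + missed)
    /// which the tests caught.
    pub fn caught_ratio(&self) -> f64 {
        let decided = self.missed + self.caught;
        if decided == 0 {
            return 0.0;
        }
        f64::from(self.caught) / f64::from(decided)
    }
}
```

For the run above, caught_ratio() is 3663 / 4552, roughly 0.80. The same parser also accepts the shorter summary later in this post, which has no timeouts.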

Starting to analyze the results

Due to the way cargo-mutants works, the "missed" results come in an arbitrary order, spread among all the source files:

rsvg/src/path_parser.rs:857:9: replace <impl fmt::Display for ParseError>::fmt -> fmt::Result with Ok(Default::default())

rsvg/src/drawing_ctx.rs:732:33: replace > with == in DrawingCtx::check_layer_nesting_depth

rsvg/src/filters/lighting.rs:931:16: replace / with * in Normal::bottom_left

rsvg/src/test_utils/compare_surfaces.rs:24:9: replace <impl fmt::Display for BufferDiff>::fmt -> fmt::Result with Ok(Default::default())

rsvg/src/filters/turbulence.rs:133:22: replace - with / in setup_seed

rsvg/src/document.rs:627:24: replace match guard is_mime_type(x, "image", "svg+xml") with false in ResourceType::from

rsvg/src/length.rs:472:57: replace * with + in CssLength<N, V>::to_points

So, I started by sorting the missed.txt file from the results. This is much better:

rsvg/src/accept_language.rs:136:9: replace AcceptLanguage::any_matches -> bool with false

rsvg/src/accept_language.rs:136:9: replace AcceptLanguage::any_matches -> bool with true

rsvg/src/accept_language.rs:78:9: replace <impl fmt::Display for AcceptLanguageError>::fmt -> fmt::Result with Ok(Default::default())

rsvg/src/angle.rs:40:22: replace < with <= in Angle::bisect

rsvg/src/angle.rs:41:56: replace - with + in Angle::bisect

rsvg/src/angle.rs:49:35: replace + with - in Angle::flip

rsvg/src/angle.rs:57:23: replace < with <= in Angle::normalize

With the sorted results, I can clearly see how cargo-mutants systematically applies its modifications to (say) all the arithmetic and logic operators, to try to find changes that would not be caught by the test suite.
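The sorted listing above puts accept_language.rs:136 before accept_language.rs:78, which is what a plain lexical sort of the lines does. If you want entries ordered by file and then numerically by line and column, a small sketch like this one works, assuming every line starts with path:line:column: as in the examples above and that the path itself contains no colon. The helper names are hypothetical.

```rust
/// Hypothetical helper: sort key for a missed.txt line such as
/// "rsvg/src/angle.rs:40:22: replace < with <= in Angle::bisect".
/// Lines without numeric line/column fields get 0 for them.
fn sort_key(entry: &str) -> (&str, u32, u32) {
    let mut fields = entry.splitn(4, ':');
    let path = fields.next().unwrap_or("");
    let line = fields.next().and_then(|s| s.parse::<u32>().ok()).unwrap_or(0);
    let column = fields.next().and_then(|s| s.parse::<u32>().ok()).unwrap_or(0);
    (path, line, column)
}

/// Sorts by path, then numerically by line and column, then by the full
/// text so that different mutants at the same position keep a stable order.
fn sort_missed(lines: &mut [&str]) {
    lines.sort_by(|a, b| sort_key(a).cmp(&sort_key(b)).then_with(|| a.cmp(b)));
}
```

To use it, read missed.txt into a String, collect its lines() into a Vec<&str>, call sort_missed on it, and print the result.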

Look at the first two lines from above, the ones that refer to AcceptLanguage::any_matches:

rsvg/src/accept_language.rs:136:9: replace AcceptLanguage::any_matches -> bool with false

rsvg/src/accept_language.rs:136:9: replace AcceptLanguage::any_matches -> bool with true

Now look at the corresponding lines in the source:

    ...  impl AcceptLanguage {
    135      fn any_matches(&self, tag: &LanguageTag) -> bool {
    136          self.iter().any(|(self_tag, _weight)| tag.matches(self_tag))
    137      }
    ...  }

The two lines from missed.txt mean that if the body of this any_matches() function were replaced with just true or false, instead of its actual work, there would be no failed tests:

    135      fn any_matches(&self, tag: &LanguageTag) -> bool {
    136          false // or true, either version wouldn't affect the tests
    137      }

This is bad! It indicates that the test suite does not check that this function, or the surrounding code, is working correctly. And yet, the test coverage report for those lines shows that they are indeed getting executed by the test suite. What is going on?

I think this is what is happening:

- The librsvg crate's test suite has no tests for AcceptLanguage::any_matches.

- The rsvg_convert crate's integration tests do have a test for its --accept-language option, and that is what causes this code to get executed and shown as covered in the coverage report.

- This run of cargo-mutants was just for the librsvg crate, not for librsvg and rsvg_convert together.

Getting a bit pedantic about the purpose of tests: rsvg-convert assumes that the underlying librsvg library works correctly. The library advertises support in its API for matching based on AcceptLanguage, even though it doesn't test it internally.

On the other hand, rsvg-convert has a test for its own --accept-language option, in the sense of "did we implement this command-line option correctly", not in the sense of "does librsvg implement the AcceptLanguage functionality correctly".
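A unit test that kills both of the any_matches mutants has to assert at least one match and at least one non-match. As a rough sketch, here is a simplified, hypothetical stand-in for AcceptLanguage: a list of (tag, weight) pairs, with plain strings compared case-insensitively instead of librsvg's real LanguageTag matching.

```rust
/// Hypothetical, simplified stand-in for librsvg's AcceptLanguage:
/// a list of (language tag, weight) pairs. Tags are plain strings here,
/// compared ASCII-case-insensitively; the real type uses LanguageTag.
pub struct AcceptLanguage(Vec<(String, f32)>);

impl AcceptLanguage {
    pub fn new(tags: &[(&str, f32)]) -> Self {
        AcceptLanguage(tags.iter().map(|(t, w)| (t.to_string(), *w)).collect())
    }

    fn iter(&self) -> impl Iterator<Item = &(String, f32)> {
        self.0.iter()
    }

    /// Same shape as the function above: true if any listed tag matches.
    pub fn any_matches(&self, tag: &str) -> bool {
        self.iter().any(|(self_tag, _weight)| self_tag.eq_ignore_ascii_case(tag))
    }
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn any_matches_accepts_listed_tags_and_rejects_others() {
        let al = AcceptLanguage::new(&[("es-MX", 1.0), ("en", 0.5)]);
        // Fails against the "replace any_matches -> bool with false" mutant.
        assert!(al.any_matches("en"));
        assert!(al.any_matches("ES-mx"));
        // Fails against the "replace any_matches -> bool with true" mutant.
        assert!(!al.any_matches("de"));
    }
}
```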

After adding a little unit test for AcceptLanguage::any_matches in the librsvg crate, we can run cargo-mutants again just for the accept_language.rs file:

# cargo mutants --package librsvg --file accept_language.rs

Found 37 mutants to test

ok Unmutated baseline in 24.9s build + 6.1s test

INFO Auto-set test timeout to 31s

MISSED rsvg/src/accept_language.rs:78:9: replace <impl fmt::Display for AcceptLanguageError>::fmt -> fmt::Result with Ok(Default::default()) in 4.8s build + 6.5s test

37 mutants tested in 2m 59s: 1 missed, 26 caught, 10 unviable

Great! As expected, we just have 1 missed mutant in that file now.

Let's look into it.

The function in question is now <impl fmt::Display for AcceptLanguageError>::fmt, an error formatter for the AcceptLanguageError type:

    impl fmt::Display for AcceptLanguageError {
        fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
            match self {
                Self::NoElements => write!(f, "no language tags in list"),
                Self::InvalidCharacters => write!(f, "invalid characters in language list"),
                Self::InvalidLanguageTag(e) => write!(f, "invalid language tag: {e}"),
                Self::InvalidWeight => write!(f, "invalid q= weight"),
            }
        }
    }

What cargo-mutants means by "replace ... -> fmt::Result with Ok(Default::default())" is that if this function were modified to just be like this:

    impl fmt::Display for AcceptLanguageError {
        fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
            Ok(Default::default())
        }
    }

then no tests would catch that. Now, this is just a formatter function; the fmt::Result it returns only says whether the underlying call to write!() succeeded. When cargo-mutants decides to change this function to return Ok(Default::default()), it can do so because fmt::Result is defined as Result<(), fmt::Error>: the Ok variant holds the unit type (), which implements Default, so Ok(Default::default()) is just Ok(()).
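A small self-contained check of that reasoning, using hypothetical types rather than the real librsvg code: Ok(Default::default()) type-checks as an fmt::Result and equals Ok(()), and a Display impl mutated that way silently produces an empty string. Only a test that looks at the formatted text, for example via to_string(), would notice.

```rust
use std::fmt;

/// Hypothetical error type with a hand-written Display impl.
pub enum DemoError {
    NoElements,
    InvalidWeight,
}

impl fmt::Display for DemoError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            DemoError::NoElements => write!(f, "no language tags in list"),
            DemoError::InvalidWeight => write!(f, "invalid q= weight"),
        }
    }
}

/// Stand-in for the mutant: same signature, body replaced with
/// Ok(Default::default()).
pub struct MutatedDemoError;

impl fmt::Display for MutatedDemoError {
    fn fmt(&self, _f: &mut fmt::Formatter<'_>) -> fmt::Result {
        // Default::default() is inferred as (), so this is Ok(()).
        // Nothing is written to the formatter.
        Ok(Default::default())
    }
}
```

Calling DemoError::NoElements.to_string() yields "no language tags in list", while MutatedDemoError.to_string() yields an empty string without any error.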

In librsvg, those AcceptLanguageError errors are just surfaced as strings for rsvg-convert, so that if you give it a command-line argument with an invalid value like --accept-language=foo, it will print the appropriate error. However, rsvg-convert does not make any promises as to the content of error messages, so I think it is acceptable not to test this error formatter. All it needs to do is handle all the cases, and its match statement already guarantees that. Rationale:

- There already are tests to ensure that the parser for AcceptLanguage computes the error codes correctly; those error codes are the variants of the AcceptLanguageError enum.

- There is a test in rsvg-convert's test suite to ensure that it detects invalid language tags and reports them.

For cases like this, cargo-mutants allows marking code to be skipped. After marking this fmt implementation with #[mutants::skip], there are no more missed mutants in accept_language.rs.

Yay!

Understanding the tool

You should absolutely read "using results" in the cargo-mutants documentation, which is very well-written. It gives excellent suggestions for how to deal with missed mutants. Again, these indicate potential gaps in your test suite. The documentation discusses how to think about what to do, and I found it very helpful.

Then you should read about genres of mutants. It tells you the kinds of modifications that cargo-mutants makes to your code. Apart from changing individual operators to try to compute incorrect results, it also does things like replacing whole function bodies to return a different value instead. What if a function returns Default::default() instead of your carefully computed value? What if a boolean function always returns true? What if a function that returns a HashMap always returns an empty hash table, or one filled with the product of all keys and values? That is, do your tests actually check your invariants, or your assumptions about the shape of the results of computations? It is really interesting stuff!
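As an illustration of testing the shape of a result, here is a hypothetical word-counting function (not from librsvg) with a test that pins down specific entries, the number of keys, and an invariant: the counts add up to the number of words. A mutant that returns an empty HashMap, or a map of default keys and values, fails these assertions, whereas a test that only checked that the call doesn't panic would let such mutants through.

```rust
use std::collections::HashMap;

/// Hypothetical example: counts how many times each whitespace-separated
/// word appears in `text`.
pub fn word_counts(text: &str) -> HashMap<String, usize> {
    let mut counts = HashMap::new();
    for word in text.split_whitespace() {
        *counts.entry(word.to_string()).or_insert(0) += 1;
    }
    counts
}

#[cfg(test)]
mod tests {
    use super::*;

    #[test]
    fn counts_have_the_expected_shape() {
        let text = "the cat saw the dog";
        let counts = word_counts(text);
        // Specific entries: fails for an empty map or a map of defaults.
        assert_eq!(counts["the"], 2);
        assert_eq!(counts["cat"], 1);
        // Number of distinct words.
        assert_eq!(counts.len(), 4);
        // Invariant: the counts add up to the number of words.
        assert_eq!(counts.values().sum::<usize>(), text.split_whitespace().count());
    }
}
```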

Future work for librsvg

The documentation for cargo-mutants suggests how to use it in CI, to ensure that no uncaught mutants are merged into the code. I will probably investigate this once I have fixed all the missed mutants; this will take me a few weeks at least.

Librsvg already has the GitLab incantation to show test coverage for patches in merge requests, so it would be nice to also know whether the existing tests, or any newly added tests, are missing any conditions in the MR. That is the kind of gap cargo-mutants can catch.

Hackery relevant to my tests, but not to this article

If you are just reading about mutation testing, you can ignore this section. If you are interested in the practicalities of compilation, read on!

The source code for the librsvg crate uses a bit of conditional compilation to select whether to export functions that are used by the integration tests as well as the crate's internal tests. For example, there is some code for diffing two images, and this is used when comparing the pixel output of rendering an SVG to a reference image. For historical reasons, this code ended up in the main library, so that it can run its own internal tests, but then the rest of the integration tests also use this code to diff images. The librsvg crate exports the "diff two images" functions only if it is being compiled for the integration tests, and it doesn't export them for a normal build of the public API.
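A minimal sketch of that kind of arrangement, assuming the gate is a cargo feature named test-utils (the name used in the command below) plus cfg(test) for the crate's own unit tests. The module name mirrors compare_surfaces.rs from the listing earlier, but the function and its signature are hypothetical; the real code depends on librsvg's internal representation of pixel images, not on byte slices. The diffing code is always compiled for internal use, and only the pub re-export is conditional.

```rust
// Hypothetical layout: the diffing code lives in a private module, so it is
// always compiled and usable by the crate's own code and unit tests.
mod compare_surfaces {
    /// Largest per-byte difference between two pixel buffers of equal
    /// length, or None if the buffers have different lengths.
    pub fn max_channel_diff(a: &[u8], b: &[u8]) -> Option<u8> {
        if a.len() != b.len() {
            return None;
        }
        Some(a.iter().zip(b).map(|(x, y)| x.abs_diff(*y)).max().unwrap_or(0))
    }
}

// Re-exported from the crate (e.g. for integration tests) only when building
// unit tests or when the "test-utils" cargo feature is enabled.
#[cfg(any(test, feature = "test-utils"))]
pub use compare_surfaces::max_channel_diff;
```

With cargo, a feature like this also has to be declared in Cargo.toml under [features], and integration tests only see the re-export if that feature is enabled for the build.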

Somehow, cargo-mutants didn't understand this, and the integration tests did not build since the cargo feature to select that conditionally-compiled code... wasn't active, or something. I tried enabling it by hand with something like cargo mutants --package librsvg -- --features test-utils but that still didn't work.

So, I hacked up a temporary version of the source tree just for mutation testing, which always exports the functions for diffing images, without conditional compilation. In the future it might be possible to split out that code to a separate crate that is only used where needed and never exported. I am not sure how it would be structured, since that code also depends on librsvg's internal representation of pixel images. Maybe we can move the whole thing out to a separate crate? Stop using Cairo image surfaces as the way to represent pixel images? Who knows!